How do we make automated tests useful to a team?

I’ve found that, as a Test Automation Engineer, it’s incredibly easy to write many, many automated functional tests for an application. Over my career I have written thousands of front-end tests, each one robust when run in isolation and (for the most part!) well thought out and necessary. One of the things I’ve been working on over the past couple of years is how to make those tests as useful as possible for the teams I work with.

For me, a useful automated test runs quickly, gives consistent output and tells you whether or not the application under test is suitable for release. When writing automated tests, I try to ensure that automation is a sustainable and embedded part of a team’s daily working practices. The tests must be visible and well understood by the team I’m working with. At any point in time, my team must be able to ask the automated tests one question: can we go live? And they should expect a timely and reliable answer from those tests.
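As a rough sketch of putting that question to the suite, the Ruby below reads a report written by Cucumber's JSON formatter (for example `cucumber --format json --out report.json`) and answers yes only if every step passed. The method name `can_we_go_live?` is hypothetical, and treating anything other than `passed` (pending, undefined and skipped steps included) as a blocker is an assumption about how strict you want the answer to be.

```ruby
require 'json'

# Hypothetical helper: answers "Can we go live?" from a Cucumber JSON report.
# Returns true only if the report contains at least one step and every step
# passed; failed, pending, undefined and skipped steps all block the release.
def can_we_go_live?(report_json)
  features = JSON.parse(report_json)
  statuses = features.flat_map do |feature|
    (feature['elements'] || []).flat_map do |scenario|
      (scenario['steps'] || []).map { |step| step.dig('result', 'status') }
    end
  end
  !statuses.empty? && statuses.all? { |status| status == 'passed' }
end

# Usage: puts can_we_go_live?(File.read('report.json')) ? 'Go live' : 'Hold the release'
```

An empty report deliberately returns false: no evidence is not the same as a green light.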

Easy to navigate

One of the things that I ask of people I’m working with is to run tests locally before checking in their changes. This usually means that a team member needs to do two things. Firstly, they must understand the impact of the changes they have made, in order to identify a suitable subset of the tests to be run. Secondly, they must be able to find and then run those tests easily and quickly.

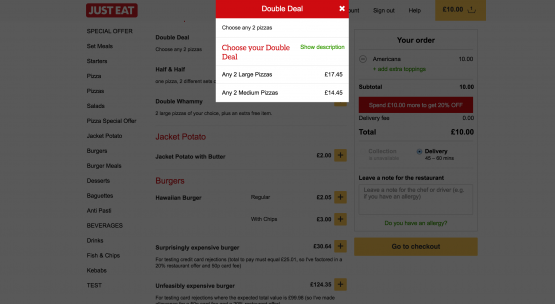

There’s a lot to be said for arranging your tests sensibly in well-structured directories and giving them sensible names, but this is only a small part of the solution. It’s usually a good idea to involve a wide range of people in defining the feature files from the start of your process. If developers, product managers and testers define the feature files together, they are more likely to describe the features using the same language. This makes it easier for people who are less familiar with the tests to find the ones they need to run for a particular change. In my current project, for example, we have this modal appearing as part of our user flow:

Initially, various names were bandied around for this modal. Some referred to it as the ‘Meal picker modal’, some called it the ‘Double deal light box’, and some of us just called it ‘That modal that appears, you know, when you select a complex item, you know, the thing with the red header’. Not ideal. Luckily, by working together to define this piece of functionality, we all now refer to it as the ‘Meal deal modal’, and our conversations about it (we do spend a worrying amount of time talking about it) are much more straightforward!
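Once the agreed name also appears in the feature files, finding the relevant tests becomes a simple text search. A minimal sketch, assuming the features live under a `features` directory (the usual Cucumber layout); `features_mentioning` is a hypothetical helper name:

```ruby
# Hypothetical helper: lists the feature files that mention a domain term,
# such as the team's agreed name for a piece of UI (case-insensitive).
def features_mentioning(term, root = 'features')
  pattern = Regexp.new(Regexp.escape(term), Regexp::IGNORECASE)
  Dir.glob(File.join(root, '**', '*.feature')).sort.select do |path|
    File.read(path).match?(pattern)
  end
end

# Usage: puts features_mentioning('Meal deal modal')
```

This only works as well as the shared vocabulary does: a search for ‘Double deal light box’ would find nothing once everyone has settled on ‘Meal deal modal’.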

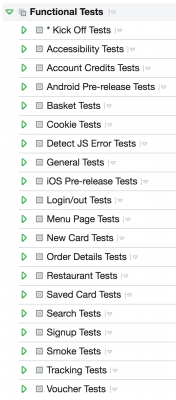

I also like to keep the barrier to entry for my automated tests as low as possible. If someone on your team finds it difficult to locate, set up and run the tests, they are most likely just not going to bother, and that means the tests are not going to be useful to them. Making the tests as easy as possible to set up and run takes the pain out of running them, and gives the people on your team one less reason to skip the functional tests. In the JUST EAT functional tests, we have a Rakefile that looks something like this:

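```ruby
desc 'Install the gems the functional tests need (run once)'
task :install do
  # sh (unlike Kernel#system) raises if the command fails, so the task fails too
  sh 'bundle install --no-cache --binstubs --path vendor/bundle'
end

desc 'Run the headless Mechanize scenarios, skipping manual and not_local ones'
task :test do
  # Old-style Cucumber tag syntax: repeated --tags are ANDed and ~ negates.
  # Newer Cucumber versions use tag expressions such as "@mechanize and not @manual".
  sh 'bundle exec cucumber --tags @mechanize --tags ~@manual --tags ~@not_local'
end
```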

This gives team members one command to set up the framework (`rake install`, which only needs to be run once) and one command to run the tests (`rake test`). Easy!
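To run just the subset relevant to a change, the same Rakefile could take tags as a task argument. The sketch below is hypothetical (the `subset` task and `cucumber_args` helper are not part of the JUST EAT Rakefile) and keeps the old-style Cucumber tag syntax used in the `test` task:

```ruby
require 'rake' # a Rakefile gets this DSL automatically; needed when run standalone

# Hypothetical helper: cucumber arguments for scenarios matching all the given
# tags (each becomes its own --tags flag, so they are ANDed), always leaving
# out manual and not_local scenarios.
def cucumber_args(tags)
  args = %w[bundle exec cucumber]
  tags.each { |tag| args.push('--tags', tag) }
  args + ['--tags', '~@manual', '--tags', '~@not_local']
end

desc 'Run a subset of scenarios, e.g. rake "subset[@meal_deal_modal]"'
task :subset, [:tags] do |_task, args|
  args.with_defaults(tags: '@mechanize')
  # Passing an argument list to sh bypasses the shell entirely
  sh(*cucumber_args(args[:tags].split))
end
```

With this in place, `rake "subset[@meal_deal_modal]"` runs only the scenarios tagged `@meal_deal_modal`. Passing a list of arguments rather than one string also avoids any shell interpretation of the `~`.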

Zero tolerance to flaky tests

I have a zero-tolerance approach to flaky tests. If a test fails and there isn’t a bug in the application? Delete it. My reasoning is that flaky tests lead people (including me) to lose faith in the tests, and for me that is the most damaging thing that can happen to a suite of automated tests. I have definitely owned test frameworks where, whenever a test failed, I instantly started digging into the test code to figure out what I’d done wrong instead of checking the application. When, after a few hours of digging, I finally checked the application and realised that a bug was causing the failure, I was pleasantly surprised. If you are ever pleasantly surprised that your tests are failing because of a real bug, then you may already have a problem with the usefulness of your tests.
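Deciding that a failure wasn’t caused by a bug is easier with some history. One hedged way to spot candidates, assuming you keep a record of each scenario run (the `Run` struct and `flaky_scenarios` method below are hypothetical), is to flag any scenario that both passed and failed against the same build of the application:

```ruby
# Hypothetical record of one scenario run: the scenario name, the build of
# the application it ran against, and whether it passed.
Run = Struct.new(:scenario, :build, :passed)

# Flags scenarios that both passed and failed against the same build. The
# application didn't change between those runs, so the inconsistency came
# from the test or its environment, or from an intermittent bug.
def flaky_scenarios(runs)
  runs.group_by { |run| [run.scenario, run.build] }
      .select { |_key, group| group.map(&:passed).uniq.size > 1 }
      .keys
      .map(&:first)
      .uniq
      .sort
end
```

A flagged scenario still deserves a quick look at the application before it is deleted, since an intermittent failure can also be a genuine race condition.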

Fast execution time

If your tests take hours to run, then you may also have issues with how useful they are. I like to use a measurement called the Developer Boredom Threshold (DBT) to judge the execution time of my tests. This super accurate metric is a bit of unmath that indicates how long a developer on a team will wait for the tests to run before getting bored and deploying to production anyway. From in-depth testing and years of research, I have estimated the average DBT at around ten minutes, so I try not to have test runs that take longer than that.
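To keep a suite honest about the DBT, the run itself can be timed against it. A minimal sketch; `check_against_dbt` is a hypothetical helper, and the ten-minute figure is the tongue-in-cheek estimate above:

```ruby
# The tongue-in-cheek Developer Boredom Threshold: ten minutes, in seconds.
DEVELOPER_BOREDOM_THRESHOLD = 10 * 60

# Hypothetical helper: runs the given block (e.g. the test suite), warns on
# stderr if it took longer than the budget, and returns the elapsed seconds.
def check_against_dbt(budget: DEVELOPER_BOREDOM_THRESHOLD)
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  if elapsed > budget
    warn format('Suite took %.0fs, over the %ds boredom threshold', elapsed, budget)
  end
  elapsed
end

# Usage: check_against_dbt { system('bundle exec rake test') }
```

A monotonic clock is used instead of `Time.now` so that a system clock adjustment mid-run cannot distort the measurement.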

When I joined JUST EAT, we had a suite of tests for the web app that took around two hours to run, plus a further hour to work out whether the failures were ‘genuine failures’ or ‘just another issue with the tests’. The first thing I did with this suite was to quarantine all the failing tests and investigate them. I also cleaned up a lot of the scenarios, removing duplicate and invalid ones where necessary. I then introduced Mechanize as a headless way of testing the scenarios that didn’t require JavaScript; Mechanize tests can run very fast and very reliably. I also invested in some additional AWS agents and rearranged the tests into logical functional areas so that they could easily be run in parallel. This has been a long journey, involving a lot of time from my team, but we are now in a much better place. The tests run robustly across 12 agents and take no longer than seven minutes. This is what a good day for the web app looks like:
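Splitting the suite into functional areas raises the question of which area goes on which agent, since a parallel run is only as fast as its slowest agent. Even a perfect split of a two-hour suite over 12 agents would take ten minutes (120 ÷ 12), so getting under seven minutes needed the clean-up and the faster Mechanize tests as well as the extra agents. The sketch below uses a common greedy heuristic (longest area first, onto the least-loaded agent); `assign_to_agents` is a hypothetical helper, and the area timings would come from your own previous runs:

```ruby
# Hypothetical helper: spreads functional areas (name => typical run time in
# seconds) across a fixed number of agents. Greedy "longest processing time
# first": each area, biggest first, goes to the agent with the least work so
# far. This keeps the slowest agent short, though it is not guaranteed optimal.
def assign_to_agents(area_durations, agent_count)
  agents = Array.new(agent_count) { { areas: [], total: 0 } }
  area_durations.sort_by { |_area, secs| -secs }.each do |area, secs|
    agent = agents.min_by { |candidate| candidate[:total] }
    agent[:areas] << area
    agent[:total] += secs
  end
  agents
end
```

The wall-clock time of the run is then roughly the largest `:total` across the agents.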

High quality test code

I used to think that the quality of test code didn’t matter at all. However, about a year ago, I got curious about how good my Ruby code was, so I ran a tool called RuboCop over it. RuboCop checks Ruby code for adherence to the Ruby community’s standards and guidelines, and can be found here: https://github.com/bbatsov/rubocop. I was pretty confident that my Ruby was amazing anyway, but RuboCop came back with over 5,000 ways in which I was offending the Ruby community! Since that day, I have always used RuboCop, and, surprisingly, when you write Ruby in a more efficient, higher-quality way, your tests run faster!

In summary, then: vast swathes of automated tests that randomly pass, are known only to your QA team and surprise you when they find a bug are not going to be useful to your team. The first step towards useful tests is to get one robust, easy-to-read and easy-to-run test, and then get your team into the habit of looking at it before releasing and running it before committing. Once they have learned this behaviour, introduce a second test, and then a third. Before you know it, your automation will be catching bugs left, right and centre. #minifistpump